During today's WWDC 2025 Keynote, Apple introduced a ton of new Apple Intelligence features. One of the standout features is an update to Visual Intelligence, which can now perform actions based on what's on an iPhone's screen.

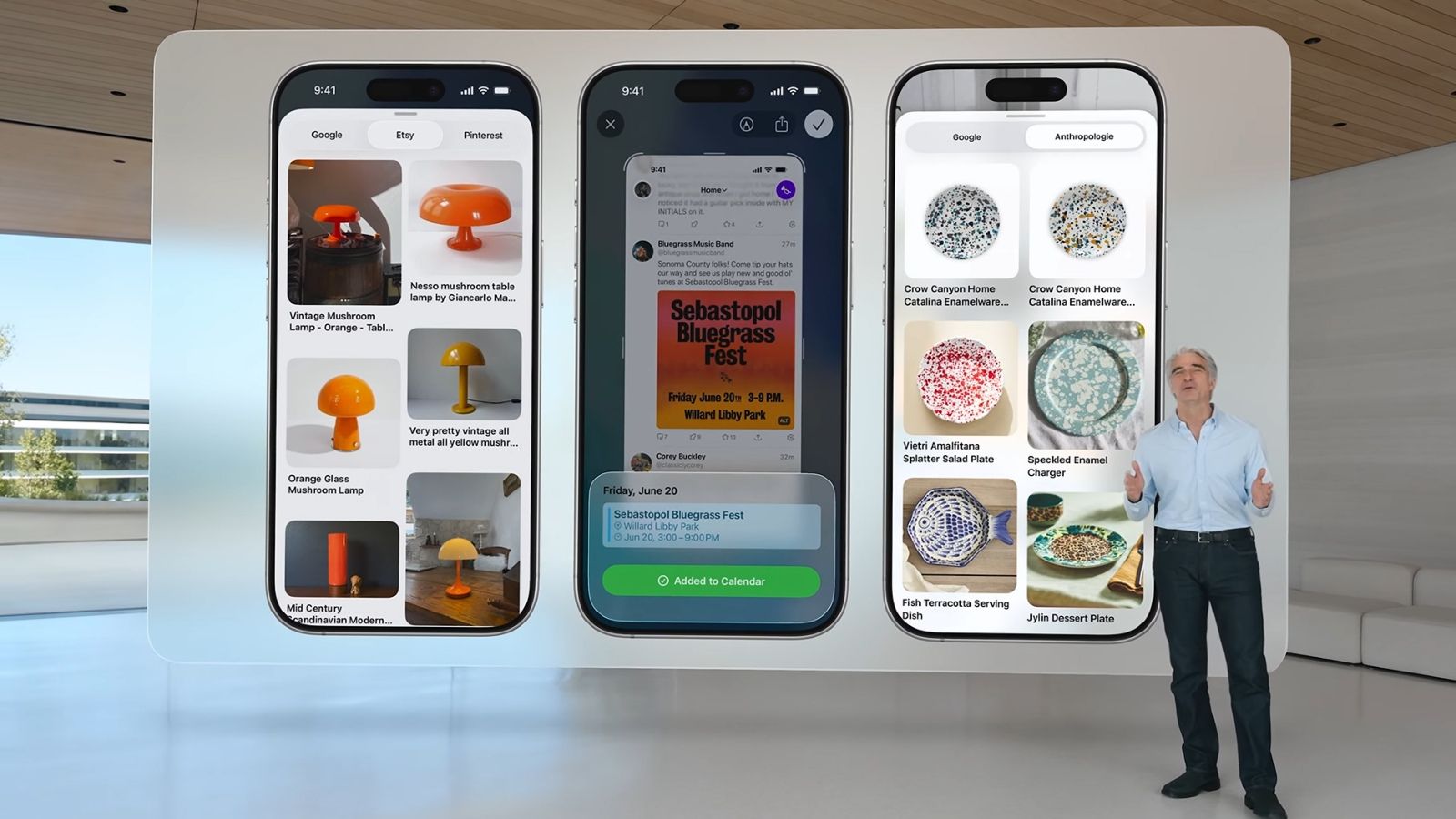

With the new Visual Intelligence feature in iOS 26, users will be able to "search and take action on anything" that's visible on their iPhone screen. For instance, users can ask ChatGPT to describe what's on their screen to find out more about the objects or places they're looking at. Apple says the feature will also work with Google, Etsy, and other supported apps to help users find relevant images and similar products.

If a user is interested in a product on their screen, they'll be able to "search for that specific item or similar objects online", much like Circle to Search on Android. Likewise, if a user is looking at an event or meeting schedule on their screen, Visual Intelligence will be able to recognise it and suggest adding the event to their calendar. The feature uses Apple Intelligence to identify key details such as the date, time, and location of the event before adding it to the calendar.

To use the new on-screen actions, users can press the Volume Up and Power buttons (the same combination used to take a screenshot) and then choose to explore further with Visual Intelligence.

The new feature is available for testing through the Apple Developer Program starting today. Because it is an Apple Intelligence-powered feature, it requires an iPhone 15 Pro, iPhone 15 Pro Max, or any iPhone 16 model running the iOS 26 Developer Beta.